2008-11-02 We Enter The Age Of Cyberpunk

Today is a "great day": we have just entered the age of Cyberpunk with the recent vote of the French Senate:

The French Senate has overwhelmingly voted in favour of a law that would cut off access to the internet to web surfers who repeatedly download copyrighted music, films or video games without paying.

In other words, the "corporate elite" (or the old and aging music industry) got a law passed to cut Internet access whenever "copyrighted content" is downloaded (sometimes on mere suspicion, or without the consent of the Internet user). Hmmm… this looks very close to the story line often used in cyberpunk stories. To be more precise, here is the portrait of cyberpunk societies drawn by David Brin:

…a closer look at cyberpunk authors reveals that they nearly always portray future societies in which governments have become wimpy and pathetic …Popular science fiction tales by Gibson, Williams, Cadigan and others ''do'' depict Orwellian accumulations of power in the next century, but nearly always clutched in the secretive hands of a wealthy or corporate elite.

We have to be prepared and should start a business creating a "black market" for Internet access, where all the people excluded from official access can get back on the network.

2008-10-19 Why You Should Use Identica and not Twitter

Microblogging is now the preferred way to publicly update the status of your life or activities. The technique is now widely used thanks to a generation trained on SMS and short text messages (just like the Post-it note fifteen years earlier ;-). If you plan to put your instant life messages into a microblogging service, I would suggest that you use identi.ca and not Twitter (or any other proprietary microblogging solution like Jaiku). There are two good reasons for doing so.

The first is a philosophical reason. Software must be free, but with the huge success of network services, where the software becomes a service, the software itself looks less important and people tend to forget about its availability. That's why, as a user, developer or service provider, you must take great care when using, developing or running a network service. The Franklin Street Statement on Freedom and Network Services, published by autonomo.us, started to look at the issue and defines what qualifies as a free (as in freedom) network service. In the area of microblogging services, identi.ca follows the Franklin Street Statement on Freedom and Network Services. The software running this microblogging service is free (released under the GNU Affero General Public License) and is called laconica.

The second is a practical reason: identi.ca runs better. As I'm cross-posting between Twitter and identi.ca, I have a script to post on both at the same time:

adulau@kz:~$ perl micropost.pl "I really like #webpy... no constraint and full freedom to code your application."

Posting to Twitter... 500 Server closed connection without sending any data back

Posting to Identi.ca... 200 OK

Yes, I get such errors very regularly: identi.ca works as expected but Twitter is just dying. As you can see, having a working service is not a matter of investment. You can have better services just because you have motivated people and design by constraint. Another interesting point is that identi.ca supports XMPP while Twitter has trouble with it. On both the practical and the philosophical side, identi.ca is the winner. Of course, you shouldn't look at the trends. Trends are for marketers, not users…
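The micropost.pl script itself is not shown here. As a rough idea of what such a cross-poster does, here is a minimal shell sketch, not the original Perl. It assumes each service accepts a plain HTTP POST with a `status` form field; authentication is left out, and the function names and any URL you pass in are hypothetical. The point is only that each service is tried independently and reports its own HTTP status, so one failing service does not hide the other.

```bash
#!/bin/bash
# Hypothetical sketch of a cross-poster (not the original micropost.pl).
# Assumption: every service takes an HTTP POST with a "status" form field.
# Real services also require authentication, which is omitted here.

# post_status NAME URL MESSAGE
# Prints "Posting to NAME... CODE", where CODE is the HTTP status code
# reported by curl (000 when no HTTP response was received at all).
# Succeeds only when the code is 200.
post_status() {
    local name=$1 url=$2 message=$3 code
    code=$(curl --silent --output /dev/null --write-out '%{http_code}' \
                --data-urlencode "status=${message}" "$url")
    echo "Posting to ${name}... ${code}"
    [ "$code" = "200" ]
}

# micropost MESSAGE NAME=URL [NAME=URL ...]
# Tries every service in turn; the return value is the number of failures.
micropost() {
    local message=$1 failures=0 service
    shift
    for service in "$@"; do
        post_status "${service%%=*}" "${service#*=}" "$message" \
            || failures=$((failures + 1))
    done
    return "$failures"
}
```

It would be called as `micropost "my message" "Twitter=<update URL>" "Identi.ca=<update URL>"`, with the update URLs taken from each service's API documentation.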

Tags: company startup innovation internet microblogging identi.ca freedom

2008-09-13 The Corporate World Should Learn From Internet

A recent blog post by Alex Schroeder about corporate wikis, and my comment on his post, reminded me of a post I wanted to write some time ago about how difficult it is for a corporation to learn from the Internet. The Internet, besides being a big mess, often works better for managing large volumes of information and on-line/distributed collaboration than any software or process designed inside a company. I don't really want to dig into why this is the case on the Internet; maybe it is due to the "trial-and-error" approach with continuous feedback (well described by Linus Torvalds in this post about the kernel's evolution). I just want to point out some areas where the corporate world could learn from the Internet to avoid classical pitfalls.

Start Small And Prototype Early (And Forget All Those Bloody Requirements...)

As nicely and visually explained in Alex Schroeder's post about corporate wikis, a new software project in a large corporation often starts by accumulating all the requirements from the "stakeholders" (in other words: anyone who could be affected by the introduction of the new project). But does it work in practice? From my small experience on the subject, I tend to say "not at all". First, it's usually impossible to find any (free or proprietary) software that meets all the requirements. In such a situation, instead of throwing away some requests from the "stakeholders", the project tries to extend the software to meet every requirement. That generates unrealistic requests like modifying existing software (the famous "small customization"), creating software from scratch, over-extending a workflow to match those crazy requirements or, worst of all, writing a two-hundred-page requirements document and sending it to a software engineer already working on 10 "corporate" projects.

Successful Internet projects often take the opposite approach: they start with something small and do it well. The first release of del.icio.us was simple and clearly focused on one thing, a multi-user bookmarking service. But it worked. Various Internet services started very small because of their limited assets and money. Starting small with a really focused objective limits the risk of creating a monster that no one wants to use. If it fails, you start a new project. On the Internet, failure is accepted and welcome, especially as it helps (only helps ;-) to avoid repeating the same failure and to experiment with other ideas that could fail or work well.

Companies should look more into this approach of creating very small projects, throw away "consensus among all the stakeholders" and implement ideas by prototyping early. I know that's not an easy task in large companies, especially changing (read: killing) the approval process (good wikis often don't care about approval, as described in Why it’s not a wiki world (yet)), the "hide failure" default policy or the "we don't code, we just hold meetings" attitude. Don't get me wrong, I'm not trying to say that everything is broken in companies. But they should start to understand that they are not living on an island and that they can gain a lot from bringing Internet practices inside the company.

This post is somewhat related to a previous stacking idea page about how to better operate a company.

Tags: company startup innovation internet wiki

2008-09-09 Hack Lu 2008

The 4th edition of the security conference, hack.lu 2008, is on track. The plenary agenda is now on-line with plenty of interesting talks. If you want to join us in this information security delirium, there is also the hackcamp/barcamp, a space where more spontaneous discussions and demos can be held. If you have crazy ideas or demos that you want to present in the information security area, the hackcamp/barcamp is the right place. On the organization side, everything is going well except for the standard glitches…

2008-08-19 IPv6 Usage Is Growing

Looking at the recent "article"/post on Slashdot about IPv6 claiming that its use is not growing, I do not agree, especially after seeing the latest IPv6 statistics from AMS-IX:

Usage is growing: more and more ISPs announce their IPv6 prefixes and start to connect their customers. By the way, I will add an AAAA record for my webserver this month. IPv6 is real, it works and its usage is increasing. Sure, it will take time, but it's time to jump…

2008-08-16 Operating Systems Always Need Git and GNU Screen

Following a micro-discussion with Michael about the software that you always need on your favorite operating system, we came to a similar conclusion: Git and GNU Screen are a must. Without them, your favorite free operating system looks useless. Of course, if you want to be ahead and always have the latest version of Git and GNU Screen in your ~/bin, there is an easy way: Git ;-)

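#!/bin/bash

# assuming a cloned repository of GNU Screen in ./screen

cd ./screen || exit 1

# fetch the latest commits

git pull

# commit ID of HEAD (the first line of "git show" is "commit <id>")

SCREEN_VERSION=$(git show | head -1 | cut -c8-)

# export a clean snapshot of HEAD, without the .git directory

git archive --format=tar --prefix=screen-current/ HEAD >../../down/screen-current.tar

cd ../../down || exit 1

tar xvf screen-current.tar

cd screen-current/src || exit 1

# stamp the commit ID into the version string

sed -e "s/devel/${SCREEN_VERSION}/" patchlevel.h >patchlevel.h.tmp

mv patchlevel.h.tmp patchlevel.h

./autogen.sh

./configure --prefix="${HOME}"

make

make install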

As GNU Screen development is hosted in Git, you can use Git features like the commit ID to generate the version. That makes it easier to know exactly which version you are running when reporting bugs. I also use a similar approach for Git itself. And you? What are your two favorite pieces of software that make an operating system useful?
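The version-stamping idea can be isolated into two small helpers. This is only a sketch: the function names are made up for illustration, and it assumes, as the script above does, that the version header contains the placeholder word "devel". It uses `git rev-parse HEAD`, which prints the commit ID directly, instead of parsing the output of `git show`.

```bash
#!/bin/bash
# Sketch: stamp a Git commit ID into a version header.
# The function names are illustrative; they are not part of Git or GNU Screen.

# head_commit_id DIR
# Prints the full commit ID of HEAD for the repository in DIR.
head_commit_id() {
    git -C "$1" rev-parse HEAD
}

# stamp_version FILE VERSION
# Replaces the first "devel" on each line of FILE with VERSION.
# Assumption: FILE uses "devel" as its version placeholder.
stamp_version() {
    local file=$1 version=$2
    sed -e "s/devel/${version}/" "$file" >"${file}.tmp" \
        && mv "${file}.tmp" "$file"
}
```

For example, `stamp_version patchlevel.h "$(head_commit_id ../..)"` run from the extracted src directory would do the same job as the sed/mv pair in the script, provided the path points at the cloned repository.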

Tags : git gnu_screen scm gnu

2008-07-21 Killing Usenet Is A Bad Idea

According to recent news from the EFF, some ISPs are increasingly limiting or blocking access to Usenet. But NNTP and Usenet can still be useful for new technologies; a nice example is an NNTP server plug-in in a wiki. In that case, you benefit from Usenet threading with a standard Usenet client, or you distribute the RecentChanges feed more efficiently than by regularly fetching RSS feeds via HTTP. Old is new and new is old… don't kill the Usenet infrastructure that could support the next interactive business.

2008-06-23 Hardware Random Number Generator Useful

If you have a machine generating various cryptographic keys, you really need an unpredictable state in your entropy pool. To reach a satisfactory level of unpredictability, the Linux kernel gathers environmental information to feed this famous entropy pool. Of course, gathering enough unpredictable information from a deterministic system is not a trivial task.

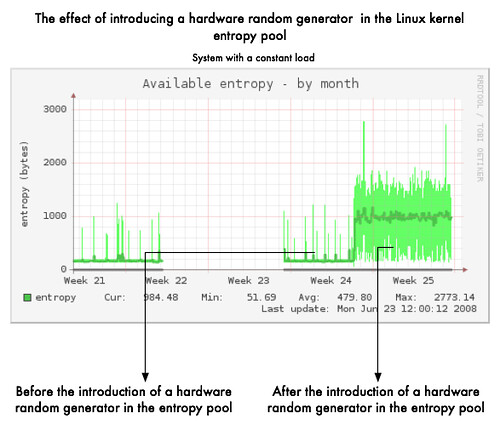

In such conditions, an independent random source is very useful: feeding the pool continuously improves its unpredictability. It also keeps your favourite cryptographic software from stalling for lack of entropy (it's often better to stall than to generate guessable keys). In the graph, you can clearly see the improvement in entropy availability. On an idle system, it is difficult for the kernel random generator to gather environmental noise, as the system behaves deterministically while doing "nearly" nothing. Here the hardware-based random generator feeds the entropy pool regularly (starting at the end of Week 24), independently of the system load/use.

If you are the lucky owner of a decent Intel motherboard, you should have the famous Intel FWH 82802AB/AC, which includes a hardware random generator (based on thermal noise). You can use a tool like rngd to feed the Linux kernel entropy pool in a secure way. By "in a secure way", I mean really feeding the pool with "unpredictable" data, by continuously testing the data with the existing FIPS tests.
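To see for yourself whether the pool is being fed, you can read the kernel's own estimate. The sketch below assumes a Linux system exposing /proc/sys/kernel/random/entropy_avail; the function names, the `ENTROPY_FILE` override and the 200-bit threshold are arbitrary choices for illustration, not recommended values.

```bash
#!/bin/bash
# Sketch: check how much entropy the Linux kernel reports as available.
# /proc/sys/kernel/random/entropy_avail is the kernel's own counter (in bits).
# Function names, ENTROPY_FILE and the default threshold are assumptions.

ENTROPY_FILE=${ENTROPY_FILE:-/proc/sys/kernel/random/entropy_avail}

# entropy_avail
# Prints the current entropy estimate.
entropy_avail() {
    cat "$ENTROPY_FILE"
}

# entropy_is_low [THRESHOLD]
# Succeeds when the estimate is below THRESHOLD (default: 200 bits).
entropy_is_low() {
    local threshold=${1:-200}
    [ "$(entropy_avail)" -lt "$threshold" ]
}
```

Calling `entropy_avail` at regular intervals (from cron, for instance) and plotting the values gives a graph similar to the one shown in this post.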

That's the bright side of life, but I would like to close this quick post with something from the OpenSSL FAQ:

1. Why do I get a "PRNG not seeded" error message? ... [some confusing information] All OpenSSL versions try to use /dev/urandom by default; starting with version 0.9.7, OpenSSL also tries /dev/random if /dev/urandom is not available. ... [more confusing information]

If I understood the FAQ correctly, OpenSSL by default tries /dev/urandom first, not /dev/random? If your entropy pool is empty or your hardware random generator is not active, OpenSSL will use the unlimited (non-blocking) /dev/urandom and could rely on data that could be predictable. Something to remember if your software still relies on OpenSSL.

Update on 03/08/2008 :

Following a comment on news.ycombinator.com, I tend to agree with its author that my statement "/dev/urandom is predictable" is wrong, but that was a shortcut to urge people to use their hardware random generator. Still, for key generation (OpenSSL is often used for that purpose), the authors of the random generator (as stated in the section "Exported interfaces ---- output") also recommend using /dev/random (and not urandom) when high randomness is required.

It's true that when you are running out of entropy, you depend only on the strength of the SHA algorithm. But if you are continuously feeding the one-way hashing function with a "regular pattern" (another shortcut), you could start to run into problems like the one in a linear congruential generator when the seed is a single character… Still, it's true that the SHA algorithm is pretty secure. So why take an additional risk (additional because maybe the hardware random generator is already broken ;-) by using /dev/urandom if you already have a high-speed hardware random generator that could nicely feed /dev/random?
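The choice between the two devices is easy to make explicit in a script. Here is a minimal sketch: `random_hex` is a made-up helper name, and it assumes a system providing `head -c` and `od`. It defaults to /dev/random, following the recommendation above, and lets the caller opt in to /dev/urandom.

```bash
#!/bin/bash
# Sketch: read random bytes from a kernel random device, printed as hex.
# random_hex is an illustrative name, not an existing tool.

# random_hex COUNT [DEVICE]
# Reads COUNT bytes from DEVICE and prints them as lowercase hex.
# DEVICE defaults to /dev/random, which may block when the kernel judges
# its entropy pool too low (the exact behaviour differs between kernel
# versions); pass /dev/urandom explicitly for the non-blocking source.
random_hex() {
    local count=$1 device=${2:-/dev/random}
    head -c "$count" "$device" | od -An -tx1 | tr -d ' \t\n'
    echo
}
```

For example, `random_hex 16` waits for the blocking device if needed, while `random_hex 16 /dev/urandom` returns immediately.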

2008-06-15 Internet Liberties Still In Danger

Everything started when governments tried to limit liberties on the Internet; the first major case was the Communications Decency Act. The famous Blue Ribbon Campaign of the EFF started in 1996 because of that legal nonsense. We thought we were safe from such stupid regulation in cyberspace when the US Supreme Court ruled that the Communications Decency Act was mainly unconstitutional. But history has proven the opposite: governments are continuously trying to limit civil liberties on the Internet (and not only in China). It's a fact, and seeing how intensely governments work to limit our rights in a space where freedom exists by nature, I really have confirmation (so many legal tricks repeated so many times to achieve complete control over the Internet) that limiting our space of freedom is an intended purpose.

Fortunately, there is still an active community (from scientists to citizens) producing interesting papers such as: Cassell, Justine, and Meg Cramer. “High Tech or High Risk: Moral Panics about Girls Online.” Digital Youth, Innovation, and the Unexpected. An interesting part is the comparison with the telegraph and the telephone. The conclusion of the paper also shows the danger of the "moral panic" for women:

And in each case that we have examined, from the telegraph to today, the result of the moral panic has been a restriction on girls’ use of technology. As we have described above, the telegraph, the telephone, and then the internet were all touted for how easy they were for young women to use, and how appropriate it was for young women to use them. Ineluctably, in each case, that ease of use and appropriateness became forgotten in a panic about how inappropriate the young women’s use of these technologies was, and how dangerous the women’s use was to the societal order as a whole. In the current case, the panic over girls’ use of technology has taken the form of believing in an increased presence of child predators online. But, as we have shown, there has been no such increase in predatory behavior; on the contrary, the number of young women who have been preyed on by strangers has decreased, both in the online and offline worlds. Finally, as with uses of communication technologies by women in the past, it is clear that participation in social networking sites can fulfill some key developmental imperatives for young women, such as forming their own social networks outside of the family, and exploring alternate identities. Girls in particular may thrive online where they may be more likely to rise to positions of authority than in the physical world, more likely to be able to explore alternate identities without the dangers associated with venturing outside of their homes alone, more likely to be able to safely explore their budding sexuality, and more likely to openly demonstrate technological prowess, without the social dangers associated with the term “geek.” And yet, when moral panics about potential predators take up all the available airtime, the importance of the online world for girls is likely to be obscured, as are other inequalities equally important to contemplate.

But obviously, I'm still very affected by the continuous flow of bad laws (like the recent one in France) and by actions like blocking Usenet. Do they want to turn the Internet into a useless medium where free speech is banned? An Internet with so many technical restrictions that it becomes impossible to use?